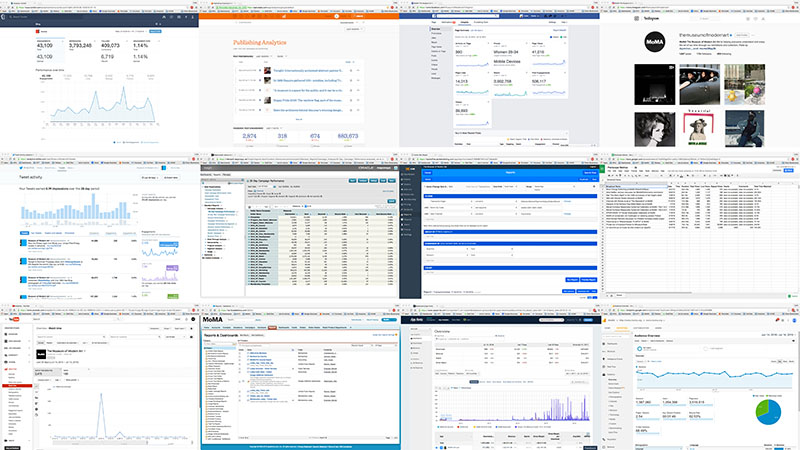

Interning with MoMA’s Digital Media department is great. Building a dashboard to track metrics across the museum is really complicated. Both of these statements are true, which is the kind of authoritative certainty I strive for in data analytics. This summer my job has been to create a dynamic dashboard that pulls in data from sources all over MoMA and, ideally, updates automatically.

On its face, building a dashboard might sound like a straightforward task, composed of a) collecting the data, and b) visualizing it. But in practice it’s been much more complicated, requiring a significant amount of both human and technical collaboration. Figuring out where various metrics are coming from, if they are what they should be, and how to wrangle them into a dashboard requires answering a lot of questions with often imperfect solutions.

Part 1: Data audit — “What’s out there?”

Because I was new and legitimately had no idea how MoMA kept track of anything, there was no reasonable way to start this project without first talking to the people who knew their data best. MoMA had already identified the types of metrics it wanted to track, but the nitty gritty of how to generate them accurately and efficiently was something I needed to sort out. I began by sitting down with individuals from different departments and asking about what metrics they were interested in, what services they used, what their workflow looked like, what things worked well, and what things they’d like to do differently.

Having these conversations with people from retail, social media, email marketing, membership, management information, IT, collections technology, and others was vital to the project for two primary reasons:

- There would be no technical way to create the metrics I needed without knowing where they had to come from.

- Without these conversations I wouldn’t be able to even start to answer the sorts of questions that have come up constantly throughout this project, and I’d be sending a dozen emails a day asking how numbers were calculated.

What those early conversations allowed me to do was be more efficient when I did have to bother people. They also meant that I had a better idea of what was possible to track, and critically at what point in the data-flow I should try to track from.

By the way, when you think you’re done with this, you’re not: something else will always come up.

Part 2: Get the data, or SaaS (Spreadsheets as a Solution)

Of course the obvious question for all of this is how do you get data into the dashboard in the first place? How do you marshal it all together in a consistent and interoperable enough form where that’s possible? It’s a lot easier said than done when you start reaching dozens of separate services, and feel your brain start to melt thinking about how to combine your Instagram, Tumblr, Twitter, Facebook, YouTube, Periscope, and email subscriber engagements segmented by month, post, impression, or number of followers.
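One way to picture "consistent and interoperable" is a single long-format row shape that every service gets converted into. The sketch below is only an illustration of that idea, not how MoMA's data is actually structured: the source names, the raw field names (`day`, `sent_on`, `impressions`, `opens`), and the helper functions are all hypothetical.

```javascript
// Hypothetical adapters: each maps one service's export fields onto shared names.
const adapters = {
  twitter: (r) => ({ date: r.day, metric: 'impressions', value: r.impressions }),
  email: (r) => ({ date: r.sent_on, metric: 'opens', value: r.opens }),
};

// Converts one raw record into the shared {source, date, metric, value} shape.
function normalize(source, record) {
  const adapter = adapters[source];
  if (!adapter) {
    throw new Error('No adapter for source: ' + source);
  }
  return Object.assign({ source: source }, adapter(record));
}

// Sums values per source, metric, and calendar month (YYYY-MM).
// Assumes dates are ISO strings such as '2016-07-31'.
function monthlyTotals(rows) {
  const totals = {};
  rows.forEach((row) => {
    const key = [row.source, row.metric, row.date.slice(0, 7)].join('|');
    totals[key] = (totals[key] || 0) + row.value;
  });
  return totals;
}
```

Once every source lands in the same shape, segmenting by month (or by source, or by metric) is the same few lines regardless of where the numbers came from. Adding a new service means writing one more adapter rather than another one-off report.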

“Data” is great, but the practice of getting it cleanly, consistently, and quickly for multiple people can be a real headache when it’s spread so widely. This is part of the impetus for MoMA to build a unified data dashboard: a single place where KPIs and other important metrics can be observed by multiple people over time, with enough information to be useful at a high level even if it isn’t necessarily the absolute truth, and where the reasons for any inaccuracies are clearly documented.

It’s important to point out that this has been by far the longest step in the process. The state of data on the web in 2016 is one of APIs and a gauntlet of new vendor-specific dashboards: once a month you need to log in to each one, remind yourself where they hid the export button and date selection, and dump the data to a spreadsheet. For a place like MoMA this presents a kind of sweet spot of organizational difficulty: enough data and the savvy to utilize it within its channel, but enough distinct data sources that it becomes a monumental task to assemble everything into a bigger aggregated picture of what’s going on.

It’s great that (in a vacuum) so many of the museum’s data sources provide access to their content or analytics online. But as the number of services MoMA and other similar institutions use continues to creep upwards, these sorts of siloed check-each-service solutions start becoming really unwieldy.

I think it can be tempting, having reached this point, to do one of the following:

- Buy a service that sells itself on integrating all your other services. This will inevitably pose its own complications, and will require yet another service in the future to better integrate the integrating services.

- Develop something in-house that will require highly technical (and expensive) maintenance for the duration of its existence.

- Say screw it to the whole thing and stick with your current inefficient workflow.

What I’ve tried to do with MoMA is split the difference between those choices, hopefully taking some of the benefits of each.

In my mind the core problem in sharing data across any like-minded medium-sized institution is making the data available at a level that is accessible from a range of technical perspectives. And I mean that both with respect to the technologies themselves and the expertise of the people utilizing them. It might be great to break your social media data out in a SQL database, or to say hey, here’s the API keys, have at it. But that doesn’t work for everyone’s level of technical know-how, even if it might work with your Tableau or Qlik integration. Building something that will last requires building something that can evolve alongside needs and expectations. So rather than focus on creating the perfect dashboard, I’ve worked to get MoMA’s data feeding into spreadsheets.

That may sound a little antiquated, but the reality is that spreadsheets are already in the workflow of just about everyone who deals with data. Getting data into Google Sheets means that nearly anyone can do something with it, from charting within the sheet, to pivot tabling it somewhere else, to importing it into just about any other data-dealing software imaginable (including, in MoMA’s case, Tableau). However, what makes Google Sheets in particular an appropriate tool for building consistency across sources is Google Apps Script.

Google Apps Script allows you, with minimal fuss, to write JavaScript that interacts with a corresponding Google Sheet. In turn, that allows for the possibility of connecting to the APIs of the various data sources you’re trying to do something with. For MoMA that means things like our ticketing system, email service, social media channels, and our app downloads tracking service, among others, can all be set up such that each night a Google Apps Script goes out to each API, requests data from the previous day, and then inserts that back into a Google Sheet. And with Google Apps Script triggers, that happens entirely automatically with no human input. From there it can be brought into visualization software like Tableau or Google Data Studio, or simply shared with someone else who can do whatever they in turn want to do with it.
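To make that concrete, here is a minimal sketch of what one of those nightly scripts might look like. It is not MoMA's actual code: the endpoint URL, the `API_TOKEN` script property, the `daily_metrics` sheet name, and the `visits` and `tickets` response fields are all hypothetical stand-ins. `UrlFetchApp`, `SpreadsheetApp`, `PropertiesService`, and `ScriptApp` are standard Apps Script services, so the last two functions only run inside Apps Script.

```javascript
// Hypothetical endpoint and sheet name; swap in a real service's values.
const API_URL = 'https://api.example.com/v1/daily-metrics';
const SHEET_NAME = 'daily_metrics';

// Returns the day before `now` as YYYY-MM-DD, computed in UTC.
function previousDay(now) {
  const d = new Date(Date.UTC(now.getUTCFullYear(), now.getUTCMonth(), now.getUTCDate() - 1));
  return d.toISOString().slice(0, 10);
}

// Flattens an assumed response shape {visits, tickets} into one sheet row.
function toRow(date, payload) {
  return [date, payload.visits, payload.tickets];
}

// Requests the previous day's numbers and appends them to the sheet.
function fetchYesterday() {
  const date = previousDay(new Date());
  // Assumes the token was saved beforehand as a script property named API_TOKEN.
  const token = PropertiesService.getScriptProperties().getProperty('API_TOKEN');
  const response = UrlFetchApp.fetch(API_URL + '?date=' + date, {
    headers: { Authorization: 'Bearer ' + token },
    muteHttpExceptions: true,
  });
  if (response.getResponseCode() !== 200) {
    throw new Error('Request failed with status ' + response.getResponseCode());
  }
  const payload = JSON.parse(response.getContentText());
  SpreadsheetApp.getActiveSpreadsheet()
    .getSheetByName(SHEET_NAME)
    .appendRow(toRow(date, payload));
}

// Run once by hand to schedule fetchYesterday daily in the 2am hour.
function installNightlyTrigger() {
  ScriptApp.newTrigger('fetchYesterday').timeBased().everyDays(1).atHour(2).create();
}
```

Keeping the date math and row shaping in plain functions, separate from the Apps Script calls, means those parts can be checked outside of Google's environment. Each additional service would get its own fetch function and its own sheet, following the same pattern.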

Admittedly, “here’s the solution, just write some JavaScript and couple it all to a dashboard in Tableau” is itself fairly technical, but on the scale of what it could be I think this is reasonable. Google provides sample code for a variety of services that, without too much trouble, can be massaged into connections to others. And on a personal level, I think the reality is that as more of the systems we interact with shift to RESTful APIs, this kind of institutional knowledge will have to grow.

What this sort of middle ground of technical automation and integration allows for is an easy source of consistent data. With proper documentation, this opens up metrics to more people without having to deal with access to the specific services themselves, and allows for them to answer more of their own data questions. The technical cost is in setting up the API connections in Google Apps Script, but once that’s done there should be much less upkeep necessary than for a more end-to-end from-source-to-viz solution.

TL;DR

The vast majority of the work in building a dashboard for MoMA’s digital properties has been in figuring out why the numbers are what they are, and how to get them into a consistent and useable format. Updating them automatically on a schedule has been icing on the cake. This is a project that has taken me across the museum and a good chunk of the internet. And it’s become abundantly clear that there is no substitute for personal conversations.

I’ll end with a few top tips for anyone undertaking a similar journey through data:

- Talk to people.

- Don’t assume you’ll remember what someone patiently explained to you; please write it down.

- Accept imperfection. Lots of it.

And if I could give those tips a second time, I would do that too.