“I’m currently on an oceanographic research vessel somewhere in the Pacific, and may be slower than usual to reply.”

Memo and Katie’s out of office, while working on this project.

I feel doomed (complimentary) to write these posts every few years about rebuilding an online collection. Maybe that’s because they’re always glued together, always better than what came before but simultaneously still a messy bridge between systems. This is by my count the 3rd major new online collection I’ve worked on for the Whitney, on top of an untold number of smaller tweaks and rewrites. Sometimes there are big new features this enables (see: portfolios, high resolution images, exhibition relationships), and sometimes, like this time, it’s quieter (see: better colors in images, verso titles). I think it’s important to acknowledge this work while also understanding that these are the kind of non-flashy, incremental improvements that are difficult to grab attention for and, despite the evidence, not always easy to write about.

What we replaced

The last online collection for the Whitney was based on syncing with the eMuseum API, which was connected through a tool called Prism to TMS (The Museum System), our collection database of record. While this was a boon at the time as it allowed us to skip having to figure out the tricky step of how to export data out of TMS and get it online, it was also a drag in that data syncing was brittle and slow. Propagating changes meant syncing each of potentially hundreds or thousands of records over REST APIs each night, and deletions required a long tail of checking whether records not seen in a while had disappeared and should therefore be removed. Getting the difference of “what changed” existed on a spectrum of possible-but-buggy to impossible. At the same time every asset and field had to go through transformations between TMS > Prism > eMuseum > whitney.org, which meant all manner of formatting errors for text or compression issues for images could crop up between each step. It worked, but it had too much middle management.

What we use now

Rather than run multiple entire applications to export data out of TMS, we’ve moved to a small collection of Python scripts that export data as JSON files and images from our shared drives to a private AWS S3 bucket. This choice follows the example of the Menil Collection’s new online collection (thank you Chad Weinard and Michelle Gude for being so willing to share), who recently did something very similar. This approach has the benefit of letting us adjust the scripts ourselves, and made it possible to have both full and differential outputs. This increased the speed and lowered the complexity of the syncing mechanisms for whitney.org. Updates, additions, and removals are easily recorded in nightly JSON exports, and the whitney.org CMS can quickly check for only the changes it needs to make. And dumping everything in a private S3 bucket is ultimately more secure and easier to manage than more web servers running [often] outdated software.
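As a rough illustration of the full-versus-differential idea (not the actual export scripts), here is a minimal Python sketch. It assumes each nightly full export can be loaded as a dictionary keyed by record ID; the function name `diff_exports`, the field names, and the sample records are all hypothetical.

```python
import json


def diff_exports(previous, current):
    """Compare two full exports keyed by record ID and return only the changes."""
    # Records present today but not yesterday.
    added = {rid: rec for rid, rec in current.items() if rid not in previous}
    # Records present yesterday but missing today.
    removed = sorted(rid for rid in previous if rid not in current)
    # Records present in both exports whose contents differ.
    updated = {
        rid: rec
        for rid, rec in current.items()
        if rid in previous and previous[rid] != rec
    }
    return {"added": added, "updated": updated, "removed": removed}


# Hypothetical snapshots standing in for yesterday's and today's full exports.
previous = {
    "1": {"title": "Example A", "verso_title": None},
    "2": {"title": "Example B", "verso_title": None},
    "3": {"title": "Example C", "verso_title": None},
}
current = {
    "1": {"title": "Example A", "verso_title": None},
    "2": {"title": "Example B", "verso_title": "Example B (verso)"},
    "4": {"title": "Example D", "verso_title": None},
}

delta = diff_exports(previous, current)
print(json.dumps(delta, indent=2))
```

With something like this, a consumer only has to apply the three lists in `delta` rather than re-checking every record, and removals are explicit instead of inferred from records that have not been seen in a while.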

For me the unlock here was realizing that we had basically already written most of the SQL export logic as part of onboarding eMuseum in the first place, and that parsing what I thought of as gigantic JSON files is actually very performant and practically a non-issue. Maybe this is from some threshold of compute improvements over the last few years, or from faster or more prevalent flash storage, or maybe this was just always possible and I needed the suggestion to do it.

The vibe coding of it all

Whatever the enabler, writing Python scripts to export some nicely formatted JSON files is vastly simpler and more controllable than orchestrating multiple 3rd party applications. It’s also easier than ever: navigating complex SQL selections and parsing strange formatting issues are borderline trivial problems to solve with modern LLMs. Outside of the initial direction and some [thankfully still valuable] domain knowledge about TMS and our collections, Claude could nearly write this on its own. Constrained coding problems like this are so effectively handled by AI that it’s almost dismaying to think about how I would have done this 5 years ago.

Unanticipated improvements

While we started this work due to internal server issues, there have been some public-facing improvements I didn’t necessarily expect. For images, removing at least one step of compression in the middle (and potentially an issue with color spaces I never figured out) has led to much better color representation across our collection photography. I didn’t even realize this was an issue until seeing how much richer something like Liza Lou’s Kitchen or Dyani White Hawk’s Wopila|Lineage looked. In terms of data, in conversations with colleagues it became clear we have hundreds of artworks with reverse sides that weren’t reflected at all on the online collection: neither verso titles, nor images of the verso side, were published. With full control of the data exports it was relatively simple to add these titles to the exports, figure out an appropriate public display, and programmatically add their images. The results can be very cool.

Beyond these changes, there are a number of smaller improvements including:

What next?

I’d like to do something with location-level data for exhibitions and illustrate the history of all the Whitney buildings more effectively. Or build some kind of timeline with all the kinds of content we can “date” now. Or set up something with a public MCP server. Whatever it is I hope it’s not another collection rewrite for a while.

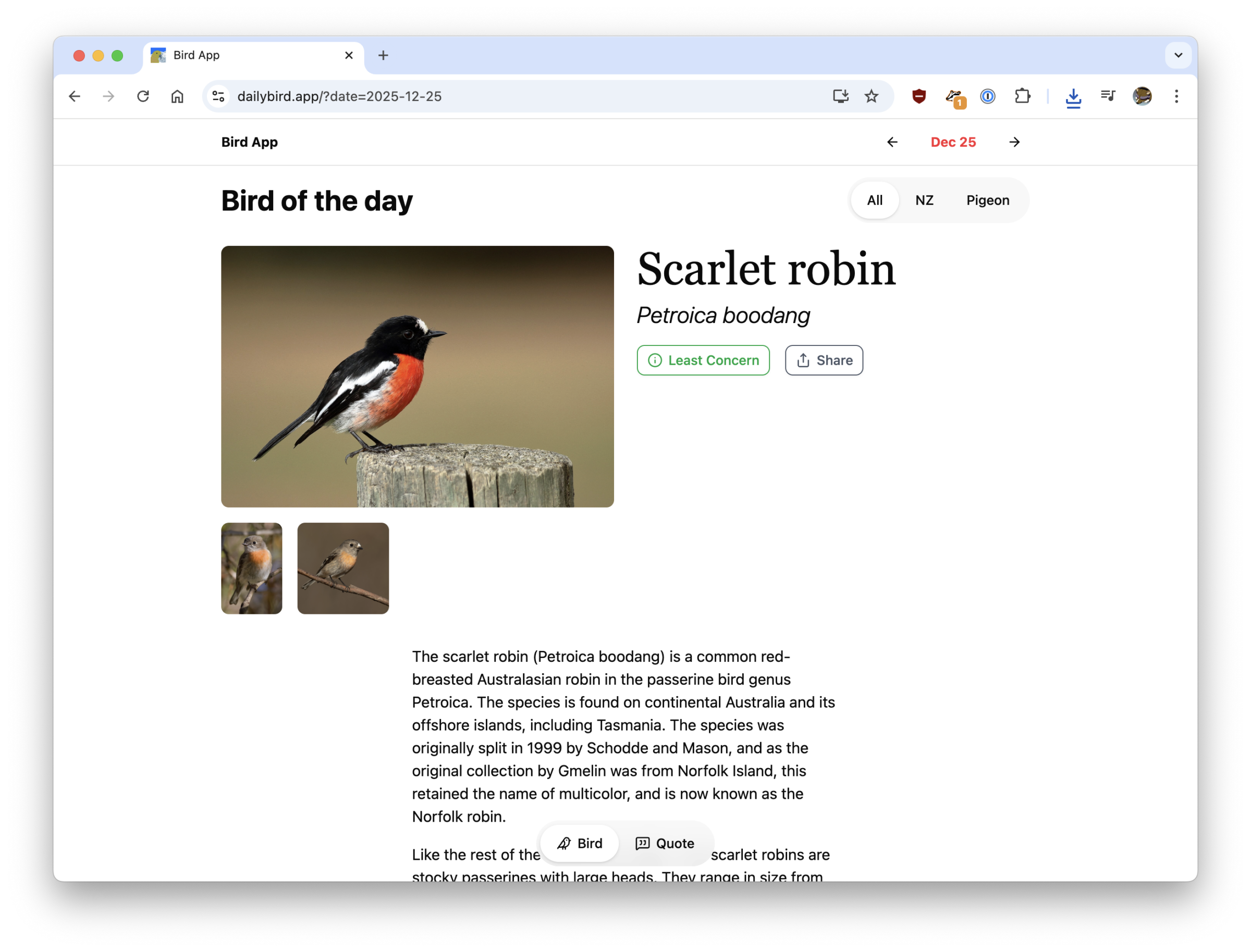

The original Daily Bird app was architected with no back end: each instance of the app would call Wikipedia and Wikidata on its own and save that data locally on the device. I did that because I didn’t want to set up a bunch of infrastructure or sign up with some SaaS option that might be gone in a year. It never really made sense if I wanted to expand things to the web or Android, where it would be extremely helpful to be able to point to a single source of [bird] truth.

It took a while to do, and even longer to post, but I’ve re-architected and renamed Daily Bird to just Bird App (which is what I was calling it anyway), and launched it on web at https://dailybird.app in addition to the existing iOS app.

On the backend this is now a Next.js app with Payload as the content management system. Both of those decisions have already bitten me, with Next.js and React having a nasty CVE in December, and Payload being bought by Figma, but I’m pretending things are fine. With the new web-based Bird App, data is simpler to keep consistent between all the instances of the app out there, and I can edit bird entries and add new info much more easily. I’ve also added support for multiple images per bird and scientific names, among other small improvements.

Still on the todo list are favorites support on web, a login system to keep track across devices, and new regional categories to filter birds by.

As always, enjoy the birds.

🦆🐦‍⬛🪿🦩🐓

I never played WoW, but I’ve played this so I’ve kind of played a person playing WoW. It’s a work of interactive nonfiction and fiction, with a full computer desktop, some light gameplay, and a healthy amount of reading.

Video with alpha channels still has very weird support in 2025, which I would categorize as a “bad” surprise. Don’t think about that when you’re watching the newest On the Hour commission though because it’s good, especially the 🐉.

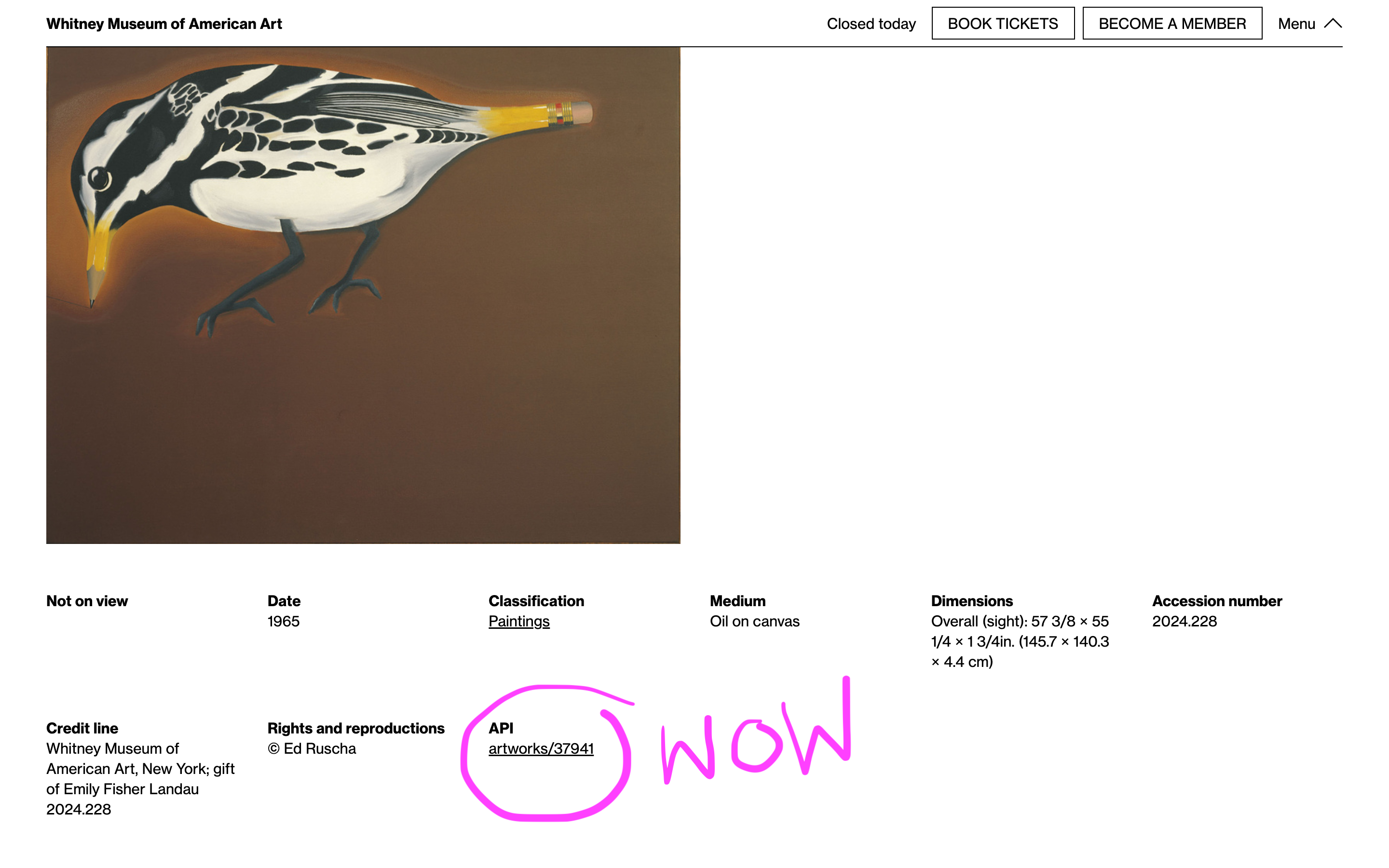

Having an API always made sense for the museum. An API provides structured, always up to date, machine-readable access to our public data that complements our existing flat file Open Access datasets. The challenge was always a) how feature rich does that API need to be and b) can we include enough material to make it useful.

For a long time we’ve had what could probably be considered a semi-complete and semi-useful API for artists, artworks, exhibitions, and events, but I hesitated to do anything to make it very discoverable outside allowing people to stumble upon it if they happened to check whitney.org/api. It always felt like a chicken and egg problem where the API needed documentation to be useful, but I couldn’t write documentation until it had enough features and consistency to be useful, and that never felt pressing because no one was using the API because there was no documentation.

After sitting with this dilemma I decided to try and start making what seemed like the most accessible parts of the API more discoverable, in the hopes of gradually juicing usage to the point where it would be more justifiable to spend more time improving it. Given the number of other museums with APIs focused around their collection, I thought that adding visible callouts for API permalinks (taking inspiration from the Getty in this case) for artist and artwork pages on the site could start to get people who tend to notice and utilize these kinds of tools interested in what we might have to offer.

Around the same time I added some basic internal analytics to usage of the API, to keep tabs on monthly usage. Partly for curiosity and partly because we have no API key requirements or rate limits at the moment and it seemed responsible.
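For a sense of how small this kind of tracking can be, here is a minimal Python sketch of tallying requests per month. It is an assumption-heavy illustration rather than the analytics actually running on whitney.org: the `monthly_usage` function and the sample timestamps are hypothetical, and it assumes each request is logged with an ISO 8601 timestamp.

```python
from collections import Counter
from datetime import datetime


def monthly_usage(timestamps):
    """Count logged requests per calendar month (YYYY-MM)."""
    counts = Counter()
    for ts in timestamps:
        # Reduce each ISO 8601 timestamp to its year and month.
        month = datetime.fromisoformat(ts).strftime("%Y-%m")
        counts[month] += 1
    # Return the months in chronological order.
    return dict(sorted(counts.items()))


# Hypothetical request log: one timestamp per API request.
request_log = [
    "2024-01-03T09:15:00",
    "2024-01-17T22:40:10",
    "2024-02-01T00:00:05",
]

usage = monthly_usage(request_log)
print(usage)  # {'2024-01': 2, '2024-02': 1}
```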

Over the last couple of years we’ve gradually added more links, and recently some actual documentation combined with improvements to how the API works and what’s included in different endpoints. During that period public usage (whether by people or bots) has gradually grown from a few thousand requests a month to 200–300k. It’s also moved from being 1 or 2 requests on every individual record, to more varied distribution across artists and artworks, better reflecting their popularity.

Usage of the API continues to trend upward, and I hope that as we have more uses for it internally and see increasing use externally we’ll continue to refine and expand its functionality.

If you’ve used the Whitney API at all or have thoughts about things we could add or change, please reach out, I’d love to know what you’re doing with it.

Between Sapponckanikan and this project with Ashley Zelinskie I do Unity now, sort of. Twin Quasar explores gravitational lensing and two works in the museum’s collection, and can be viewed on MONA, whitney.org, and an app on Apple Vision Pro. I mostly worked on prepping the archival version.

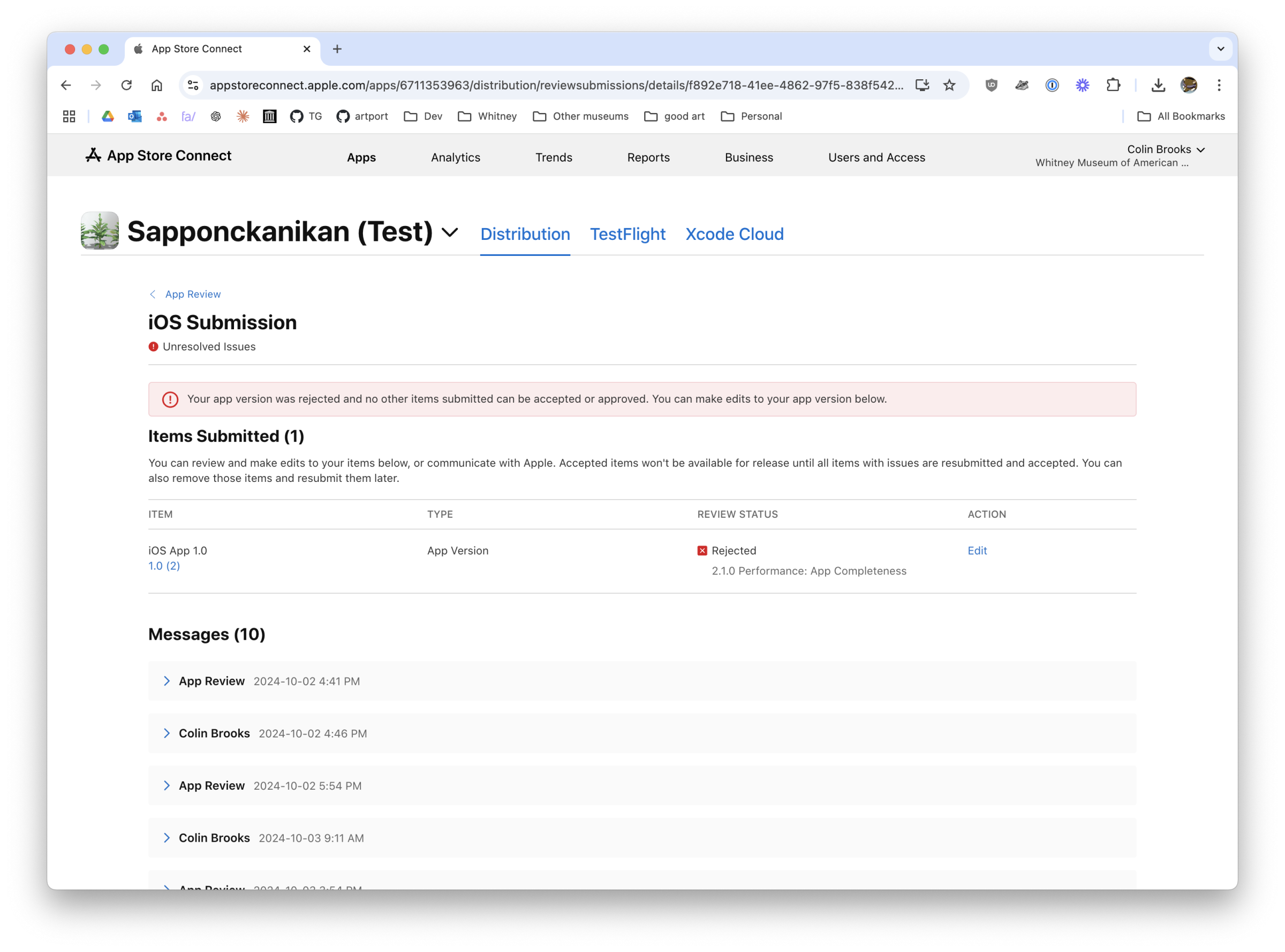

A couple months ago I had the pleasure or burden of releasing either the first apps ever, or the first apps in the last decade for the Whitney Museum. Working with Steven Fragale we revived Sapponckanikan, an app he and Alan Michelson released and first showed at the Whitney in 2019 as part of Wolf Nation.

Sapponckanikan is an augmented reality (AR) work that overlays the native strain of tobacco plants that the Lenape originally planted on the island that would become Manhattan on top of the viewer’s camera feed in the Museum lobby. The app was built with Unity, and the plants modeled after references from Alan’s sister’s garden.

By 2024 the version of the app from 2019, built from Unity and submitted to the Apple and Android app stores from Steven’s own accounts, was no longer publicly available. Privately, locally, the app would not build for current phone operating systems (OS’s) at all, and Steven had to rewrite the app multiple times—at least once for all the changes to ARKit, ARCore, and Vuforia (a common SDK for AR experiences), and at least once more again for the transition from Intel to Apple Silicon breaking shadow rendering.

That an app released for a show that ended in 2020 was already off the app stores and unbuildable by early 2024 was a bit shocking, even to someone (me) who already thought native apps decay incredibly quickly. I was expecting some amount of platform drift: that we’d have to re-sign some accounts or app files, and maybe tweak a few settings for deprecated bits of iOS and Android. I was not expecting to have to effectively re-code the app twice and even then still run into new roadblocks.

One of the things that still feels the strangest to me about this entire process was that even when we got the app working on both iOS and Android, both app stores denied our initial submissions for release, for reasons that lie somewhere on the spectrum from perplexing to infuriating. For Google the issues were relatively straightforward, requiring extra safety language built in for warning children that they should have an adult present and pay attention to their surroundings. That seems slightly overbearing to me, but fine, sure, though I’ll point out that those sorts of changes required creating new assets in Unity and laying out new elements which is not nothing.

On the other hand Apple just was not having it.

Initially Apple blocked submission of the app on the grounds that it had “Minimum functionality” (Guideline 4.2.1). Most of the 10 back and forth messages I had with my app reviewer were over this, though helpfully they later added Guideline 2.1 Information Needed, asking for a video that demonstrates the app on actual hardware, before rescinding that additional requirement during appeal.

If this was something like a todo list or budgeting app I could understand the need to make sure there’s enough basic functionality in the app to make it reasonable for it to be an app in the first place (though my bird app was also caught by this), but at the risk of stating the obvious this one is art, and I can confidently say I don’t know what the “minimum functionality” should be for an artwork. I attempted to plead this case through 10 messages without any luck, and honestly figured this just wasn’t going to be releasable on iOS as an app, and would have to wait for whatever far off day mobile Safari will gain meaningful WebXR support.

![A possum with its mouth open with the caption, Your art does not meet Guideline 4.2.1 Minimum Functionality, followed by [Screaming beings].](https://colinbrooks.com/wp-content/uploads/2025/01/possum_rejection.jpg)

Thanks to Reddit I had run across the suggestion that sometimes it’s worth just renaming the project and resubmitting to get a new app reviewer, a strategy that makes me slightly nervous even typing out but one that also in our case worked. After submitting a new project Apple promptly approved Sapponckanikan, and it entered the world anew.

It’s worth noting that this process took a few weeks, every day of which brought us closer to the opening of the show that the app was supposed to be in and available for the public to download, and increased my own anxiety about the project. I’m sympathetic to how much garbage must be submitted to the app stores every day that Apple and Google must have to wade through, but at the same time it’s pretty disheartening that artists’ experimentation with new technologies like AR is so limited by what’s able to get approved in the app stores. And that doesn’t touch on the barriers like having developer accounts (not seen, our original Apple account getting banned for reasons I still don’t understand), paying any developer fees, validating who you are (I got to see the Whitney’s water bill for the first time, our official documentation for our organization), learning how to archive and sign the apps, posting privacy policies online, etc. There’s a lot in a public release of a project even if only a few hundred people might ultimately use it. Next to all this is the web, a platform that, for all its faults, basically lets you post anything you want and, if you don’t need to touch it again, will in many cases run forever.

Sapponckanikan was a good learning experience for me, I’m sure for Steven and Alan, and I imagine for others throughout the museum as we figured out the process to have a more institutional presence on the app stores. At the same time and more surprising than not, it reinforced my own belief that the web is a really great place for artists in a way that the more locked down app stores are not, even if technically they can offer bleeding edge features that the web only comes to later. For $5 you can have a VPS or for mostly no dollars GitHub Pages or Cloudflare Pages or any number of other free static hosts, and if you lean on well supported native web technologies like HTML, CSS, JS, or even PHP or Flash if this was 10 years ago (thanks Ruffle), then you’ll almost without question outlast any native app. Everything decays eventually, but the time horizon on web apps that don’t do anything too outlandish or depend on content management systems or big external dependencies looks a lot farther off than anything I’ve written in Xcode.

Check out Sapponckanikan on iOS or Android, and hopefully eventually the web.

I’ve watched a billion hours of TikTok but, unlike Maya Man, I have nothing to show for it. I worked with Maya on the first true On the Hour commission for whitney.org.